In the previous article, we focused on building a performance testing framework using Gatling. With the foundational setup in place, it's time to explore the various features Gatling offers to create more dynamic and realistic test scenarios. One of the first and most powerful features we'll look into is Feeders.

Feeders in Gatling serve as a dynamic data source for your simulations. They allow you to inject external or generated data into your test scripts, enabling each virtual user to operate with different input values. This is particularly useful when simulating real-world scenarios where users interact with a system in varied ways—such as using unique credentials, different product IDs, or custom request parameters.

Gatling feeders can be loaded from CSV or JSON files, read from a database, or generated on the fly from in-memory collections or custom logic. They support several feeding strategies (queue, random, shuffle, and circular), giving you control over how data is distributed across virtual users.

By incorporating feeders, you can avoid hardcoding values, scale your test coverage, and identify bugs or performance bottlenecks that only appear with certain inputs. This not only enhances the realism of your tests but also improves the overall reliability and accuracy of your performance testing efforts.

In essence, feeders are essential for data-driven testing in Gatling and form the backbone of simulations that mimic real user behavior at scale.

Data can be loaded from various feeder sources, such as:

- CSV files

- JSON files

- In-memory data structures

- Database

Feeding Strategies in Gatling

Feeding strategies in Gatling determine how test data is retrieved from a feeder and supplied to virtual users during simulation runs. Selecting the appropriate feeding strategy is essential because it directly affects how test inputs are distributed—whether they are reused, randomized, or iterated sequentially—which in turn can influence the realism and accuracy of your test results.

Gatling offers a variety of built-in feeder strategies tailored to support different performance testing needs. These strategies allow for granular control over how data flows through your simulations, enabling testers to mimic real-world user behavior and interaction patterns more closely.

Here’s a breakdown of the major strategies available:

- queue(): This is the default strategy in Gatling. Data is consumed in the order it appears in the feeder file, starting from the first row and moving sequentially through the rest. It’s ideal for scenarios requiring unique records per user. However, if the feeder runs out of data before the test completes, Gatling will stop execution.

- random(): This strategy randomly selects rows from the feeder, introducing variability into your test without following any particular order. It’s useful when you want to simulate different users making requests with random inputs, helping to avoid repetitive patterns.

- shuffle(): With this approach, Gatling first shuffles the feeder entries to randomize their order and then proceeds like the queue() strategy. This provides both randomness and structure but, like queue(), the test will halt once the data is exhausted.

- circular(): The circular strategy loops through the feeder data endlessly. Once Gatling reaches the end of the feeder, it starts again from the beginning. This is particularly beneficial for long-running tests where data reuse is acceptable or expected, ensuring uninterrupted execution regardless of data size.

By understanding and applying these feeder strategies effectively, you can simulate more realistic user interactions and better align your test design with actual business scenarios.
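To make the differences between the four strategies concrete, here is a minimal plain-Java sketch that models how each one hands out records. It is an illustration only, not Gatling's implementation: the class name `FeederStrategySketch`, the helper `take`, and the sample user names are all hypothetical, and the real strategies are selected through the DSL methods described above.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.Iterator;
import java.util.List;
import java.util.NoSuchElementException;
import java.util.Random;

// Illustrative model only: this is NOT how Gatling implements its strategies.
public class FeederStrategySketch {

    // queue: records come out in their original order, then the feeder is exhausted.
    static <T> Iterator<T> queue(List<T> records) {
        return new ArrayList<>(records).iterator();
    }

    // shuffle: randomize the order once, then behave exactly like queue.
    static <T> Iterator<T> shuffle(List<T> records, Random rng) {
        List<T> copy = new ArrayList<>(records);
        Collections.shuffle(copy, rng);
        return copy.iterator();
    }

    // circular: after the last record, wrap around to the first one again.
    static <T> Iterator<T> circular(List<T> records) {
        return new Iterator<T>() {
            private int index = 0;

            @Override
            public boolean hasNext() {
                return !records.isEmpty();
            }

            @Override
            public T next() {
                if (records.isEmpty()) {
                    throw new NoSuchElementException("empty feeder");
                }
                T record = records.get(index);
                index = (index + 1) % records.size();
                return record;
            }
        };
    }

    // random: pick any record on every call, so repeats are possible.
    static <T> Iterator<T> random(List<T> records, Random rng) {
        return new Iterator<T>() {
            @Override
            public boolean hasNext() {
                return !records.isEmpty();
            }

            @Override
            public T next() {
                if (records.isEmpty()) {
                    throw new NoSuchElementException("empty feeder");
                }
                return records.get(rng.nextInt(records.size()));
            }
        };
    }

    // Demo helper: pull n records from a feeder.
    static <T> List<T> take(Iterator<T> feeder, int n) {
        List<T> out = new ArrayList<>();
        for (int i = 0; i < n; i++) {
            out.add(feeder.next());
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> users = Arrays.asList("alice", "bob", "carol");
        System.out.println(take(queue(users), 3));    // [alice, bob, carol]
        System.out.println(take(circular(users), 5)); // [alice, bob, carol, alice, bob]
        System.out.println(take(shuffle(users, new Random()), 3)); // same users, random order
        System.out.println(take(random(users, new Random()), 5));  // any users, may repeat
    }
}
```

Asking the queue or shuffle iterator for a fourth record here would throw, which mirrors why a test using those strategies needs at least as many records as it will consume.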

CSV Feeders

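CSV feeders read data from a CSV file and provide it to the virtual users during the simulation. The first line of the file is the header, and its column names become the attribute names of each record. CSV files should be placed in the src/gatling/resources, src/test/resources, or src/main/resources directory (depending on your build tool and project layout) to be automatically recognized. In our example, we use a CSV feeder with the circular strategy.

Data from a CSV file, or from any other feeder, can be referenced in the script either through the Gatling DSL, using a Gatling Expression Language placeholder (for example #{email} in recent Gatling versions, where email is a column name), or through the Session API (for example session.getString("email") in the Java DSL).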

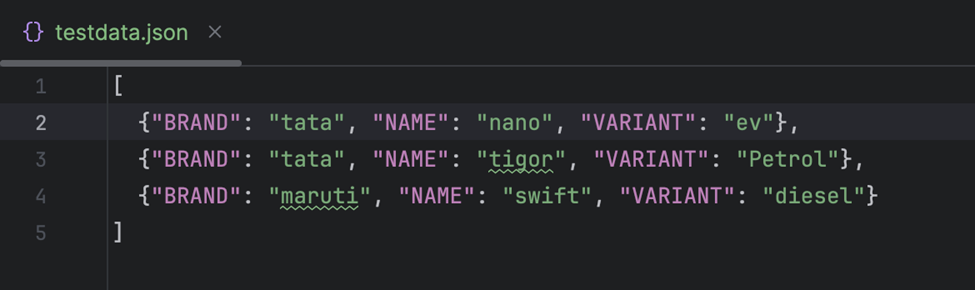

JSON files

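JSON files are used to inject data into simulations, just like CSV feeders, and you refer to their data in the script the same way. The root element of the file must be an array of objects, and each object becomes one record. In the Java DSL, for instance, a JSON feeder is declared with jsonFile(...), passing the file name, and it accepts the same feeding strategies as a CSV feeder.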

In-memory data structures

In Gatling, in-memory data structures can be used as a source for feeders to inject dynamic data into your simulations. This allows you to avoid external dependencies like CSV files or databases by storing data directly within your code, making it convenient for scenarios where the test data is relatively simple or small-scale.

In-memory feeders are typically data structures like lists, maps, or arrays, and Gatling can feed data from these structures in a similar way to how it handles external files.

To demonstrate in-memory feeders, I have created a new endpoint using JSON Server. The endpoint simply adds an email address and a mobile number to the database.

To inject data into our simulation (in this case, an email address and a mobile number), we have created an in-memory feeder. This feeder generates an infinite stream of records using the supplied logic, and each record is a map from attribute name to value.
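A generated feeder of this kind can be sketched in plain Java as an endless `Iterator<Map<String, Object>>`, which is the shape Gatling's Java DSL accepts in `feed(...)`. The class name `ContactFeederSketch`, the `example.com` addresses, and the 10-digit mobile format are assumptions made for illustration; the attribute names `email` and `mobile` follow the endpoint described above.

```java
import java.util.HashMap;
import java.util.Iterator;
import java.util.Map;
import java.util.UUID;
import java.util.concurrent.ThreadLocalRandom;
import java.util.function.Supplier;
import java.util.stream.Stream;

// Hypothetical sketch of a generated in-memory feeder (no Gatling dependency needed here).
public class ContactFeederSketch {

    // Builds an endless feeder: every call to next() produces a fresh record.
    static Iterator<Map<String, Object>> contactFeeder() {
        Supplier<Map<String, Object>> nextRecord = () -> {
            Map<String, Object> record = new HashMap<>();
            // Assumed format: a unique address on example.com.
            record.put("email", "user-" + UUID.randomUUID() + "@example.com");
            // Assumed format: a random 10-digit number, stored as text.
            long mobile = ThreadLocalRandom.current().nextLong(1_000_000_000L, 10_000_000_000L);
            record.put("mobile", String.valueOf(mobile));
            return record;
        };
        return Stream.generate(nextRecord).iterator();
    }

    public static void main(String[] args) {
        Iterator<Map<String, Object>> feeder = contactFeeder();
        // Print three sample records; the stream itself never runs out.
        for (int i = 0; i < 3; i++) {
            System.out.println(feeder.next());
        }
        // In a Gatling Java simulation, this iterator could be passed to feed(...)
        // and the values referenced by the keys "email" and "mobile".
    }
}
```

Because the records are generated on demand, no feeding strategy is needed: the feeder cannot be exhausted, and each virtual user gets its own values.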

If you want to use predefined data held in a list or an array instead, you can use a list feeder (listFeeder) or an array feeder (arrayFeeder).
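As a rough sketch of what such predefined data could look like, the plain-Java class below builds the same records once as a list and once as an array. Everything in it is hypothetical (the class name `PredefinedContactsSketch`, the helper `contact`, and the sample values); the comments note where the Java DSL's `listFeeder` and `arrayFeeder` would come in, assuming Gatling is on the classpath.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch of predefined in-memory records (no Gatling dependency needed here).
public class PredefinedContactsSketch {

    // Helper: one record, keyed by attribute name.
    static Map<String, Object> contact(String email, String mobile) {
        Map<String, Object> record = new HashMap<>();
        record.put("email", email);
        record.put("mobile", mobile);
        return record;
    }

    // Records as a list; in a Gatling Java simulation this could be wrapped as
    // listFeeder(contactList()).circular()
    static List<Map<String, Object>> contactList() {
        return Arrays.asList(
            contact("anna@example.com", "9000000001"),
            contact("ben@example.com", "9000000002"),
            contact("cara@example.com", "9000000003"));
    }

    // The same records as an array; in a Gatling Java simulation this could be wrapped as
    // arrayFeeder(contactArray()).random()
    @SuppressWarnings("unchecked")
    static Map<String, Object>[] contactArray() {
        return contactList().toArray(new Map[0]);
    }

    public static void main(String[] args) {
        System.out.println(contactList().size() + " records in the list");
        System.out.println(contactArray().length + " records in the array");
    }
}
```

Unlike a generated feeder, a list or array holds a fixed number of records, so the feeding strategy matters again: with the default queue strategy, the test stops once the records run out.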

Database

Gatling's JDBC feeder allows dynamic retrieval of test data directly from a relational database, making it ideal for accessing database-stored data during performance testing. For this example, I’ll demonstrate using a PostgreSQL database with a table named contact_details, from which the test data will be read.

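To read the data from the database, declare a JDBC feeder. In the Java DSL this is jdbcFeeder(...), which takes the JDBC URL, the username, the password, and the SQL query, and it requires the database's JDBC driver on the classpath. You can then refer to the data directly using the column names EMAIL and MOBILE.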

The complete source code for this article is available in my GitHub repository—feel free to explore it, clone it, and experiment on your own. That wraps up our discussion for now. In the upcoming article, we’ll continue our journey into Gatling by diving into more advanced features and best practices to enhance your performance testing strategies. Stay tuned for deeper insights, hands-on examples, and tips to level up your test simulations. Until then, keep learning, keep experimenting, and as always—happy testing! 🚀